Linear/Nonlinear Logic and Linear Algebra

Benton’s Linear/Nonlinear Logic has models in (lax) monoidal adjunctions between monoidal and cartesian categories.

The tasks performed in GPU kernels often deal with linear and nonlinear maps between real vector spaces.

I want to take this and run with it: let’s see what it looks like to define some mixed linear/nonlinear functions in a linear/nonlinear type theory. This feels to me so obvious that it must be written down somewhere already. In the literature there is work that gets close (and I’ll do some comparisons at the end), but none are quite what I want. Please let me know if I’ve missed something.

Edit: It’s been pointed out to me that the type theory I’ve put together is almost exactly the Enriched Effect Calculus, though I still haven’t seen the semantics in ordinary vector spaces discussed anywhere. And see my follow-up!

The Setting

To be totally explicit, what we want to capture is:

- \mathrm{Set} is cartesian closed with finite coproducts,

- \mathrm{Vec}_{\mathbb{R}} is symmetric monoidal closed with finite biproducts.

- \mathrm{Vec}_{\mathbb{R}} is powered and copowered over \mathrm{Set}, and in particular there is a lax monoidal adjunction given by \begin{align*} \mathsf{F}- &:\equiv- \odot \mathbb{R} \\ \mathrm{U}- &:\equiv\mathrm{Vec}_{\mathbb{R}}(\mathbb{R}, -) \end{align*} (and \mathsf{F} is automatically also strong monoidal by “doctrinal adjunction”).

Judgements

There are different presentations of the logic, some which merge the \mathcal{L} and \mathcal{C} rules. I want to keep things separated to avoid (my own) confusion. So we have two term judgements, one cartesian and one linear.

\Gamma \vdash x : X \qquad \qquad \Gamma \mid \Lambda \vdash a :: A

The context of the linear judgement has two “zones”, with a term such as the above interpreted as a morphism a : \mathsf{F}\Gamma \otimes \Lambda \to A. We don’t need “bunches” in the sense of bunched logics, because bunching only arises when \to and \multimap exist on the same mode/category, which is not the case here.

I’ll be consistent about using serif and : for nonlinear

definitions, and sans-serif and :: for linear definitions.Because

linear is bouba and non-linear is kiki.

Tensor and Unit Type

Standard rules:

I will use ℝ to refer to the monoidal unit, although nothing in this post really requires it to be the real numbers as opposed to an arbitrary ring. The syntax for the introduction rule \mathsf{e}:: ℝ is chosen so that we think of \mathsf{e} as an “abstract basis vector”. It is tempting to use 1 here, but 1 looks more like a constant which can be dropped into any term in any location, which is certainly not the case for \mathsf{e}.

In most places, I’m going to write functions that involve these eliminators using pattern matching notation rather than mentioning the eliminators explicitly. Sue me!

Power, Copower and Hom-set

Our first departure from the ordinary presentation of LNL is to generalise the \mathsf{F} and \mathrm{U} adjunction to the copower and “external” hom adjunction

First the copower, a kind of mixed pairing of a nonlinear and linear

type.This kind of mixed paring isn’t novel, a dependent version of

this was used in the LNL_D theory of Krishnaswami, Pradic and Benton

(cited below).

We will use this type former to define ℝ^{\bold{n}} :\equiv\bold{n}

\odot ℝ.Though the classical notation ℝ^{\bold{n}} does suggest

using the power here…

Any time we feel the need to say “pick a

basis for A”, that is a sign we should be using X \odot ℝ instead

(where X can certainly be infinite).

This copower has two different right adjoints, depending on which side

of the \odot we transpose. If we transpose the linear side, we get

the nonlinear type of homomorphisms between linear types.Distinct

from the linear type \multimap of homomorphisms between linear types.

From the above operations we can derive the functors \mathsf{F} and \mathrm{U} exactly as suggested earlier: \mathsf{F}:\equiv- \odot \mathbb{R} and \mathrm{U}:\equiv\mathrm{Vec}_{\mathbb{R}}(\mathbb{R}, -). The derived rules are slightly different to what you see in other presentations of LNL, but I think it will help to be explicit about what’s happening to the unit type ℝ involved in both of these constructions.

For the other right adjoint, if we transpose the nonlinear side of

\odot we get the power X \pitchfork B. This is the linear type

given by the X-fold product of B with itself.Where does this

pitchfork notation come from originally?

To summarise the four function types we have now:It seems harmless

to have all of these as independent type formers, though you could

define some of them in terms of the others, like \mathrm{Vec}_{\mathbb{R}}(A, B) \cong

\mathrm{U}(A \multimap B).

| Type Former | Domain | Codomain | Result |

|---|---|---|---|

| - \to - | \mathcal{C} | \mathcal{C} | \mathcal{C} |

| - \multimap- | \mathcal{L} | \mathcal{L} | \mathcal{L} |

| \mathrm{Vec}_{\mathbb{R}}(-,-) | \mathcal{L} | \mathcal{L} | \mathcal{C} |

| - \pitchfork - | \mathcal{C} | \mathcal{L} | \mathcal{L} |

Accordingly, there are four different ways a variable can be bound: one for each function type. To help distinguish, I’ve used \lambda x when binding a nonlinear variable, so for \to and \pitchfork, and \partial a when binding a linear variable, so for \multimap and \mathrm{Vec}(-,-).

Boolean

We’ll need to be able to eliminate nonlinear inductive types into the

linear term judgement.In Mike’s terminology this is asking that the

mode morphism from nonlinear types into linear ones is “transparent”.

(I think.)

This should be derivable because \mathsf{F} is a left

adjoint, but it’s convenient to just have it as a rule. For example,

for \mathrm{Bool}:

This kind of nonlinear interaction is a change in perspective compared to other applications of LNL. I don’t want to think of the nonlinear world as a “metatheory” for building linear maps (like quantum circuits, say), but as something that can be jumbled together with the linear type formers.

Additive Products

The standard rules for additive products:

We could also put in additive coproducts, but we’re not going to need them.

Global Additivity

This is the other feature that goes beyond the original LNL logic: we

add in global “additivity” rules for all linear types. This will

turn the products we just put in above into biproducts.And note also

that “addition is additive”, in that the linear context is not split

into two pieces by the + rule.

Besides being commutative, associative and unital, these global rules will need to interact sensibly with the other type formers:

\begin{align*} \mathsf{let} \; {\mathsf{e}} = {0} \; \mathsf{in} \; {c} &\equiv 0 \\ \mathsf{let} \; {x \otimes y} = {0} \; \mathsf{in} \; {c} &\equiv 0 \\ 0 &\equiv\partial x. 0 \\ 0 &\equiv 0 \mathbin{\&}0 \\ ~\\ \mathsf{let} \; {\mathsf{e}} = {a + a'} \; \mathsf{in} \; {c} &\equiv(\mathsf{let} \; {\mathsf{e}} = {a} \; \mathsf{in} \; {c}) + (\mathsf{let} \; {\mathsf{e}} = {a'} \; \mathsf{in} \; {c}) \\ \mathsf{let} \; {x \otimes y} = {a + a'} \; \mathsf{in} \; {c} &\equiv(\mathsf{let} \; {x \otimes y} = {a} \; \mathsf{in} \; {c}) + (\mathsf{let} \; {x \otimes y} = {a'} \; \mathsf{in} \; {c}) \\ (\partial x. a) + (\partial x. a') &\equiv\partial x. (a + a') \\ (a \mathbin{\&}b) + (a' \mathbin{\&}b') &\equiv(a + a') \mathbin{\&}(b + b') \\ \end{align*}

I’m thinking of these equations as directed, so we prefer to rewrite the left sides into the right sides. (I may be missing some…)

Examples

Now let’s run the experiment. What does it feel like to write down some basic definitions in this thing?

Monoidality and Functoriality

Let’s check quickly that we get out what we think we put in. Remembering that \mathsf{F}X and \mathrm{U}A are shorthand for X \odot ℝ and \mathrm{Vec}_{\mathbb{R}}(ℝ, A) respectively:

\begin{align*} &\mathsf{mon}_\mathsf{F}:: \mathsf{F}X \otimes \mathsf{F}Y \multimap\mathsf{F}(X \times Y) \\ &\mathsf{mon}_\mathsf{F}((x \odot \mathsf{e}) \otimes (y \odot \mathsf{e})) :\equiv(x, y) \odot \mathsf{e}\\ ~\\ &\mathsf{mon}_\mathsf{F}^{-1} :: \mathsf{F}(X \times Y) \multimap\mathsf{F}X \otimes \mathsf{F}Y \\ &\mathsf{mon}_\mathsf{F}^{-1}((x, y) \odot \mathsf{e}) :\equiv(x \odot \mathsf{e}) \otimes (y \odot \mathsf{e}) \\ ~\\ &\mathrm{mon}_\mathrm{U}: \mathrm{U}A \times \mathrm{U}B \to \mathrm{U}(A \otimes B)\\ &\mathrm{mon}_\mathrm{U}(a, b)(\mathsf{e}) :\equiv a(\mathsf{e}) \otimes b(\mathsf{e}) \\ \end{align*}

(Or in \mathrm{mon}_\mathrm{U} we could not pattern match on the unit and use the argument on one of the sides.)

It’s similarly straightforward to show that all our type formers are functorial. For purely linear or nonlinear type formers it goes exactly as normal, so I’ll just demonstrate the ones that involve both linear and nonlinear types.

\begin{align*} &\mathsf{func}_\odot :: \mathsf{F}(X \to Y) \multimap(A \multimap B) \multimap(X \odot A \multimap Y \odot B) \\ &\mathsf{func}_\odot(f \odot \mathsf{e})(h)(x \odot a) :\equiv f(x) \odot h(a) \\ ~\\ &\mathsf{func}_\pitchfork :: \mathsf{F}(Y \to X) \multimap(A \multimap B) \multimap(X \pitchfork A \multimap Y \pitchfork B) \\ &\mathsf{func}_\pitchfork(f \odot \mathsf{e})(h)(p)(y) :\equiv h(p(f(y))) \\ ~\\ &\mathrm{func}_{\mathrm{Vec}_{\mathbb{R}}} : \mathrm{Vec}_{\mathbb{R}}(D, C) \to \mathrm{Vec}_{\mathbb{R}}(A, B) \to (\mathrm{Vec}_{\mathbb{R}}(C, A) \to \mathrm{Vec}_{\mathbb{R}}(D, B)) \\ &\mathrm{func}_{\mathrm{Vec}_{\mathbb{R}}} (h, g, f)(d) :\equiv g(f(h(d))) \end{align*}

The definitions for \odot and \mathrm{Vec}_{\mathbb{R}} specialise to \mathsf{F} and \mathrm{U}:

\begin{align*} &\mathsf{func}_\mathsf{F}:: \mathsf{F}(X \to Y) \multimap(\mathsf{F}X \multimap\mathsf{F}Y) \\ &\mathsf{func}_\mathsf{F}(f \odot \mathsf{e})(x \odot r) :\equiv f(x) \odot r \\ ~\\ &\mathrm{func}_\mathrm{U}: \mathrm{Vec}_{\mathbb{R}}(A, B) \to (\mathrm{U}A \to \mathrm{U}B) \\ &\mathrm{func}_\mathrm{U}(h, a)(r) :\equiv h(a(r)) \end{align*}

Interestingly, there’s a different kind of functoriality for

\pitchfork: nonlinear functoriality on the underlying sets. This is

a genuinely different construction to the above, not just its image

under some bijection, because the provided function could nonlinearly

scramble all the elements of \mathrm{U}A.What a mess!

\begin{align*} &\mathrm{func}_\pitchfork : (Y \to X) \to (\mathrm{U}A \to \mathrm{U}B) \to \mathrm{U}(X \pitchfork A) \to \mathrm{U}(Y \pitchfork B) \\ &\mathrm{func}_\pitchfork(f, g, u)(r)(y) :\equiv g(\partial r'. u(r')(f(y)))(r) \end{align*}

I am unable to define analogous function for \odot, even though it feels like it ought to be possible (using additivity maybe).

\begin{align*} &\mathrm{func}_\odot : (X \to Y) \to (\mathrm{U}A \to \mathrm{U}B) \to \mathrm{U}(X \odot A) \to \mathrm{U}(Y \odot B) \\ &\mathrm{func}_\odot(f, g, u) :\equiv{???} \end{align*}

Biproducts

Global additivity gives A \mathbin{\&} B the universal property of the coproduct. Let’s just rename the type former to A \oplus B from this point on (though in linear logic this is usually used for the additive coproduct).

\begin{align*} &\mathsf{inl}:: A \multimap A \oplus B \\ &\mathsf{inl}(a) :\equiv a \oplus 0 \\ &\mathsf{inr}:: B \multimap A \oplus B \\ &\mathsf{inr}(b) :\equiv 0 \oplus b \end{align*}

Coproduct induction comes from addition:

\begin{align*} &\mathsf{case} :: (A \multimap C) \oplus (B \multimap C) \multimap(A \oplus B) \multimap C \\ &\mathsf{case}(r, s) :\equiv\mathsf{pr}_1(r)(\mathsf{pr}_1(s)) + \mathsf{pr}_2(r)(\mathsf{pr}_2(s)) \end{align*}

Or, if you prefer an inference rule:

Arithmetic

Multiplication corresponds to the unitor for ℝ.

\begin{align*} &\mathsf{mult} :: ℝ \otimes A \multimap A \\ &\mathsf{mult}(\mathsf{e}\otimes a) :\equiv a \\ ~\\ &\mathsf{mult} :: ℝ \otimes ℝ \multimap ℝ \\ &\mathsf{mult}(\mathsf{e}\otimes r) :\equiv r \end{align*}

In the latter case, we could do unit-induction on both sides or just keep it one-sided. We can derelict multiplication to a nonlinear map, using the same idea as \mathrm{mon}_\mathrm{U}:

\begin{align*} &\mathrm{mult} : \mathrm{U}ℝ \times \mathrm{U}ℝ \to \mathrm{U}ℝ \\ &\mathrm{mult}(a, b) :\equiv\partial \mathsf{e}. \mathsf{mult}(a(\mathsf{e}) \otimes b(\mathsf{e})) \end{align*}

Nonlinearly, we have 1 : \mathrm{U}ℝ defined as the identity homomorphism 1 :\equiv\partial r. r.

We certainly have 0 :: ℝ linearly because we put zero in as a rule, from which we can define \partial r. 0 : \mathrm{U}ℝ. Addition is not bilinear, so the best we can hope for is a map \mathrm{U}ℝ \times \mathrm{U}ℝ \to \mathrm{U}ℝ, which we can define using global additivity.

\begin{align*} &\mathrm{add} : \mathrm{U}ℝ \times \mathrm{U}ℝ \to \mathrm{U}ℝ \\ &\mathrm{add}(a, b) :\equiv\partial r. a(r) + b(r) \end{align*}

At this point it would be sensible to check that the ring axioms hold for \mathrm{U}ℝ, using the equations we put in for +.

Matmul

Let’s suppose we have (nonlinear) finite types \bold{n}, which we can pattern match on and manipulate as normal. Euclidean spaces ℝ^\bold{n} are defined by ℝ^\bold{n} :\equiv\bold{n} \odot ℝ, or in other words, \mathsf{F}\bold{n}. We can define the dot product as a linear map, using case analysis on the nonlinear Boolean value of i = j.

\begin{align*} &\mathsf{dot} :: ℝ^\bold{n} \otimes ℝ^\bold{n} \multimap ℝ \\ &\mathsf{dot}((i \odot r) \otimes (j \odot s)) :\equiv\mathsf{if}\;{i=j}\;\mathsf{then}\;{\mathsf{mult}(r, s)}\;\mathsf{else}\;{0} \end{align*}

Or, if you want to be edgy:In the special case of X \odot ℝ, we

could write the term/pattern (i \odot \mathsf{e}) as \mathsf{e}_i: it really

is like we’re pattern-matching on basis vectors. But I’ll resist doing

that in this post, to make it easier to remember that it’s really

\odot.

\begin{align*} &\mathsf{dot}((i \odot \mathsf{e}) \otimes (j \odot \mathsf{e})) :\equiv\mathsf{if}\;{i=j}\;\mathsf{then}\;{\mathsf{e}}\;\mathsf{else}\;{0} \end{align*}

We are usually handed two separate vectors nonlinearly, and like before we can handle this by dereliction.

\begin{align*} &\mathrm{dot} : \mathrm{U}ℝ^\bold{n} \times \mathrm{U}ℝ^\bold{n} \to \mathrm{U}ℝ \\ &\mathrm{dot}(u, v) :\equiv\partial \mathsf{e}. \mathsf{dot}(u(\mathsf{e}) \otimes v(\mathsf{e})) \end{align*}

These definitions generalise easily to matrix multiplication, by functoriality of \otimes. This is the place where the linear/nonlinear logic really shines, so let’s hope there’s a lot of this.

\begin{align*} &\mathsf{matmul} :: ℝ^\bold{m} \otimes ℝ^\bold{n} \otimes ℝ^\bold{n} \otimes ℝ^\bold{k} \multimap ℝ^\bold{m} \otimes ℝ^\bold{k} \\ &\mathsf{matmul}(v \otimes (i \odot \mathsf{e}) \otimes (j \odot \mathsf{e}) \otimes w) :\equiv\mathsf{if}\;{i=j}\;\mathsf{then}\;{v \otimes w}\;\mathsf{else}\;{0} \\ \end{align*}

Of course, because we’re already working in a theory that has linear functions, we’d much rather use those than explicit matrices wherever we can.

\begin{align*} &\mathsf{unexplode}_\multimap:: (ℝ^\bold{n} \otimes A) \multimap(ℝ^\bold{n} \multimap A) \\ &\mathsf{unexplode}_\multimap(v \otimes a)(w) :\equiv\mathsf{mult}(\mathsf{dot}(v \otimes w) \otimes a) \\ \end{align*}

It might come in handy to know that \mathsf{unexplode}_\multimap is a linear map, but of course we can also define the same thing nonlinearly:

\begin{align*} &\mathrm{unexplode}_{\mathrm{Vec}_{\mathbb{R}}} : \mathrm{U}(ℝ^\bold{n} \otimes A) \to \mathrm{Vec}_{\mathbb{R}}(ℝ^\bold{n}, A) \\ &\mathrm{unexplode}_{\mathrm{Vec}_{\mathbb{R}}}(t)(w) :\equiv\mathsf{unexplode}_\multimap(t(\mathsf{e}))(w) \end{align*}

To define the inverse of this, we’ll need to cover iteration.

Iteration

How do we iterate over a vector of real numbers? Realistically, we

probably want iteration over a vector to be a primitiveAs it is in

Futhark, for example.

, but assuming some

reasonable rules for the finite types \bold{n} we can do it by

induction.These examples do need proper nonlinear dependent types to

make sense, but hopefully you get the idea. We are doing dependent

elimination on the n : \mathbb{N} parameter of the finite type

former.

The linear isomorphism

\begin{align*} &\mathsf{cons} :: ℝ \oplus ℝ^\bold{n} \multimap ℝ^\bold{n+1} \\ &\mathsf{cons}(\mathsf{inl}(r)) :\equiv\mathsf{zero} \odot r \\ &\mathsf{cons}(\mathsf{inr}(i \odot r)) :\equiv\mathsf{suc}(i) \odot r \\ ~\\ &\mathsf{cons}^{-1} :: ℝ^\bold{n+1} \multimap ℝ \oplus ℝ^\bold{n} \\ &\mathsf{cons}^{-1}(\mathsf{zero} \odot r) :\equiv\mathsf{inl}(r) \\ &\mathsf{cons}^{-1}(\mathsf{suc}(i) \odot r) :\equiv\mathsf{inr}(i \odot r) \\ \end{align*}

suggests that we can pattern match on elements of ℝ^\bold{n+1} as ℝ

\oplus ℝ^\bold{n}I’m cheating here, officially we are accessing

elements of A \oplus B by projection rather than pattern matching,

but the projection syntax is a bit noisy.

. Then, for example:

\begin{align*} &\mathrm{sum}_\bold{n} : \mathrm{U}ℝ^\bold{n} \to \mathrm{U}ℝ \\ &\mathrm{sum}_\bold{0}(v) :\equiv\partial r. 0 \\ &\mathrm{sum}_\bold{n+1} (v) :\equiv\mathsf{add}(\partial r. \mathsf{pr}_1(v(r)), \partial r. \mathrm{sum}_\bold{n}(\mathsf{pr}_2(v(r)))) \end{align*}

and

\begin{align*} &\mathrm{map}_\bold{n} : (\mathrm{U}ℝ \to \mathrm{U}ℝ) \to \mathrm{U}ℝ^\bold{n} \to \mathrm{U}ℝ^\bold{n} \\ &\mathrm{map}_\bold{0}(f, v) :\equiv\partial r. 0 \\ &\mathrm{map}_\bold{n+1}(f, v) :\equiv\partial r. \mathsf{cons}(\mathsf{pr}_1(v(r)) \oplus \mathrm{map}_\bold{n}(f, \partial r'. \mathsf{pr}_2(v(r')))(r)) \end{align*}

This kind of induction also lets us give an inverse to \mathsf{unexplode} above:

\begin{align*} &\mathsf{explode}_{\bold{n}} :: (ℝ^\bold{n} \multimap A) \multimap(ℝ^\bold{n} \otimes A) \\ &\mathsf{explode}_{\bold{0}}(h) :\equiv 0 \\ &\mathsf{explode}_{\bold{n+1}}(h) :\equiv(\mathsf{zero} \odot \mathsf{e}) \otimes h(\mathsf{zero} \odot \mathsf{e}) + (\iota \otimes \mathsf{id}_A)(\mathsf{explode}_{\bold{n}}(h \circ \iota)) \end{align*} where \iota :: ℝ^\bold{n} \multimap ℝ^\bold{n+1} is the inclusion \iota(i \odot e) :\equiv\mathsf{suc}(i) \odot e. Nonlinearly:

\begin{align*} &\mathrm{explode} : \mathrm{Vec}_{\mathbb{R}}(ℝ^\bold{n}, A) \to \mathrm{U}(ℝ^\bold{n} \otimes A) \\ &\mathrm{explode}(h)(\mathsf{e}) :\equiv\mathsf{explode}(\partial v. h(v)) \end{align*}

What does it look like to apply a nonlinear function along an axis? This can’t be done generically, in the sense of defining a map

\mathrm{map}_2 : (\mathrm{U}B \to \mathrm{U}C) \to \mathrm{U}(A \otimes B) \to \mathrm{U}(A \otimes C)

for an arbitrary linear type A, because the result really does

depend on a choice of basis for A. This is easiest to do if we first

take the inputs in unexploded form.Using X \odot ℝ makes clear

that this function does require us to pick a basis, but doesn’t

require the vector space to be finite dimensional.

\begin{align*} &\mathrm{along} : (\mathrm{U}B \to \mathrm{U}C) \to \mathrm{Vec}_{\mathbb{R}}(X \odot ℝ, B) \to \mathrm{Vec}_{\mathbb{R}}(X \odot ℝ, C) \\ &\mathrm{along}(f, h)(i \odot r) :\equiv f(\partial r'. h(i \odot r'))(r)\\ \end{align*}

Now, if we really want to define \mathsf{map}_2 as map on (the

underlying set of) the tensor type, we unexplode the matrix into a

homomorphism, compose, and then re-explode:And here, we see that the

final exploding step is the only place we actually need that

\mathbb{R}^\mathbf{q} is finite dimensional.

\begin{align*} \mathrm{U}(\mathbb{R}^\mathbf{q} \otimes B) \longrightarrow \mathrm{Vec}_{\mathbb{R}}(\mathbb{R}^\mathbf{q}, B) \longrightarrow \mathrm{Vec}_{\mathbb{R}}(\mathbb{R}^\mathbf{q}, C) \longrightarrow \mathrm{U}(\mathbb{R}^\mathbf{q} \otimes C) \end{align*}

So:

\begin{align*} &\mathrm{map}_2 : (\mathrm{U}B \to \mathrm{U}C) \to \mathrm{U}(\mathbb{R}^\mathbf{q} \otimes B) \to \mathrm{U}(\mathbb{R}^\mathbf{q} \otimes C) \\ &\mathrm{map}_2(f) :\equiv\mathrm{explode} \circ \mathrm{along}(f) \circ \mathrm{unexplode} \end{align*}

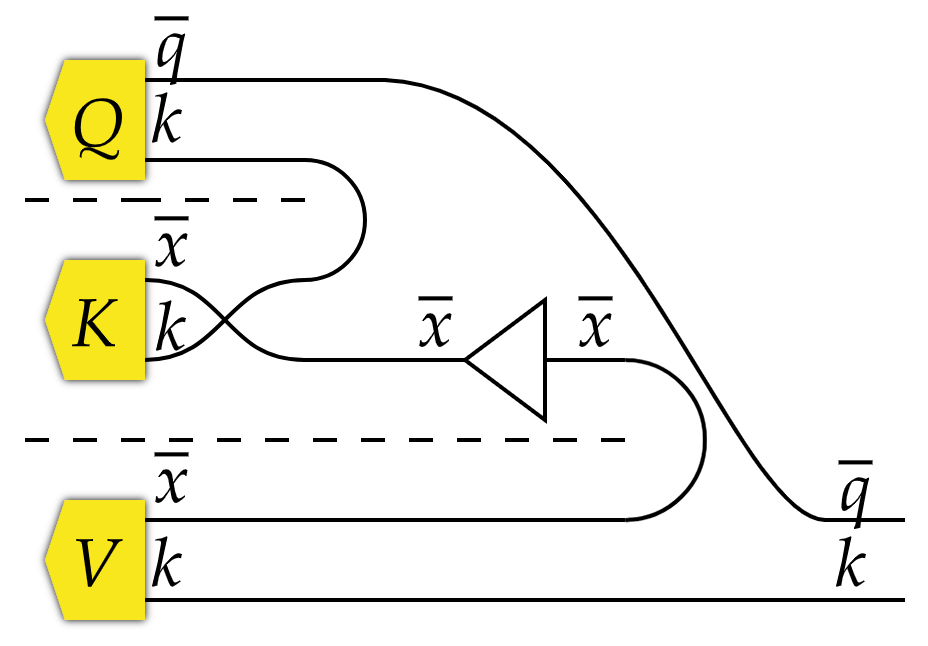

Attention

Let’s try to apply what we’ve learned so far to the most famous

linear/nonlinear map of ℝ-vector spaces in the world: the attention

mechanism. Here’s a string diagram of what we’re trying to replicate

in code, where the “caps” represent the \mathrm{dot} map from above,

and the triangular node represents \mathrm{softmax}:I find these kinds of

“string diagrams” quite confusing, because the interchange law fails

when mixing linear/nonlinear maps and the meaning of a diagram is not

invariant under isotopy. In other words, the relative horizontal

position of the nodes matters; even when the nodes are completely

disconnected from one other. Here, pulling the upper cap past the

\mathrm{softmax} results in a different map.

Of course, we can’t fuse this into a single linear map because it

involves the non-linear \mathrm{softmax}: \mathrm{U}ℝ^\bold{n} \to \mathrm{U}ℝ.We

could define \mathrm{softmax} ourselves using iteration, if we assume we

have \mathrm{exp} : \mathrm{U}ℝ \to \mathrm{U}ℝ and \mathrm{recip} : \mathrm{U}

ℝ \to \mathrm{U}ℝ, say.

Taken literally, that diagram is

\begin{align*} &\mathrm{attention} : \mathrm{U}(ℝ^\bold{q} \otimes ℝ^\bold{k}) \times \mathrm{U}(ℝ^\bold{x} \otimes ℝ^\bold{k}) \times \mathrm{U}(ℝ^\bold{x} \otimes ℝ^\bold{k}) \to \mathrm{U}(ℝ^\bold{q} \otimes ℝ^\bold{k}) \end{align*}

But we’re better just using \mathrm{Vec}_{\mathbb{R}} everywhere we can, and exploding things later if we really want:

\begin{align*} &\mathrm{attention} : \mathrm{Vec}_{\mathbb{R}}(ℝ^\bold{q}, K) \times \mathrm{Vec}_{\mathbb{R}}(K, X) \times \mathrm{Vec}_{\mathbb{R}}(X, K) \to \mathrm{Vec}_{\mathbb{R}}(ℝ^\bold{q}, K) \\ &\mathrm{attention}(Q, K, V) :\equiv V \circ \mathrm{along}(\mathrm{softmax})(K \circ Q) \end{align*}

This is the definition we get just clicking things together. If we inline all the definitions, it’s a bit more direct.

\begin{align*} &\mathrm{attention} : \mathrm{Vec}_{\mathbb{R}}(ℝ^\bold{q}, K) \times \mathrm{Vec}_{\mathbb{R}}(K, X) \times \mathrm{Vec}_{\mathbb{R}}(X, K) \to \mathrm{Vec}_{\mathbb{R}}(ℝ^\bold{q}, K) \\ &\mathrm{attention}(Q, K, V)(q \odot \mathsf{e}) :\equiv V(\mathrm{softmax}(\partial r. K(Q(q \odot r)))) \end{align*}

This is about as simple as we could hope for! It almost looks like we haven’t done anything at all, but this syntax tracks and enforces the correct interaction of the linear and nonlinear parts of the definition. I think this is a good place to stop for now.

Dependent Types

One probably wants a version of this that has dependent nonlinear types, and linear types that can depend on those nonlinear types (but not on other linear types). If nothing else, we need this to actually justify the way we used the finite types \bold{n}. For inspiration one should probably look at:

Related Work

There are a couple of similar existing bits of work. Closest is Jennifer Paykin’s thesis and some of the related papers.

The thesis demonstrates how to implement LNL as an embedded language inside a nonlinear host language like Haskell or Coq, and this looks like it works very well in practice. If I understand right, the focus is more on using the nonlinear language as a metalanguage for the linear language, rather than considering the whole system together as being semantically useful. This is also the case for the Quipper line of work. Her \mathtt{Box} constructor is like the external hom, which I here called \mathrm{Vec}_{\mathbb{R}}(-,-).

Then, there are the CHADs.

This is exciting work, and the part relevant to us is the

linear/nonlinear “target” language for their source transformation.

Unfortunately the linear context zone in this type theory is a

“stoup”, meaning that it contains exactly one linear variable.If

there’s some CHAD work that I missed which covers the properly linear

tensor product, please let me know!

As a result, that language is not

able to express the linear tensor product, which is something I

definitely want to have.

Separately, additivity and the \lambda-calculus has been considered in the following line of work:

The first paper considers additivity in the context of the ordinary lambda calculus, and the second considers additivity in the context of intuitionistic linear logic but with a dual-zone context structure different to ours. There are only linear types, with the “nonlinear” part of the context corresponding to linear types that have had the {!} comonad applied. Accordingly, there are no nonlinear type formers, like functions/products/coproducts etc.

There is also some work on modelling the lambda calculus directly in finite dimensional vector spaces over finite fields.

Here, the theory being modelled really is the ordinary non-linear lambda calculus without any linear features, though they do also consider additivity.

The purpose of both restricting to finite dimensional vector spaces and finite base fields is so that the comonad {!}:\equiv\mathsf{F} \mathrm{U} (in our notation) is strong monoidal: {!}(A \times B) \cong {!}A \otimes {!}B. This is not a problem for us, because our priority is matching the semantics in \mathbb{R}-vector spaces rather than matching the linear logic rules for !.